
Trees and bugs in computers

Scientists often rely on sensors to collect data. However, sensors can fail for many surprising yet entirely possible reasons. Have you ever thought about what you would do if you lost a couple of hours of data because lightning destroyed a sensor? Sensors may also freeze in winter due to low temperatures. Moreover, certain sensors require calibration every year because of inexorable sensor drift. As a result, raw data is usually not reliable until it goes through special processing, known as “quality control.” This summer, I worked with Dr. Emery Boose and Prof. Barbara Lerner on quality control of sensor data and on data provenance tracking. In this research, we considered several quality control techniques, including calibration, detection of irregular values, and filling gaps in missing data. Different quality control methods generate different versions of the processed data. As datasets grow larger and time passes, it becomes easy to forget which quality control actions were applied to a particular version of the processed data. That is why recording the data provenance, or the history of the data, is essential.
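To make the three quality control steps a little more concrete, here is a minimal Python sketch. It assumes a simple linear calibration, a fixed plausible range for spotting irregular values, and linear interpolation for filling gaps; the function names, thresholds, and sample readings are all hypothetical, and this is not the R code we actually wrote. It also keeps a list of the actions applied, a very crude form of the provenance record described above.

```python
from typing import List, Optional, Tuple

# None marks a missing reading (e.g. a gap left by a failed or frozen sensor).
Series = List[Optional[float]]


def calibrate(values: Series, offset: float, scale: float) -> Series:
    """Hypothetical linear calibration: corrected = (raw - offset) * scale."""
    return [None if v is None else (v - offset) * scale for v in values]


def flag_irregular(values: Series, low: float, high: float) -> Series:
    """Treat readings outside the assumed plausible range [low, high] as missing."""
    return [v if v is not None and low <= v <= high else None for v in values]


def fill_gaps(values: Series) -> Series:
    """Fill interior runs of missing values by linear interpolation.

    Gaps at the very start or end have no neighbor on one side and stay None.
    """
    filled = list(values)
    i = 0
    while i < len(filled):
        if filled[i] is not None:
            i += 1
            continue
        start = i - 1  # last valid index before the gap
        end = i        # advance to the first valid index after the gap
        while end < len(filled) and filled[end] is None:
            end += 1
        if start >= 0 and end < len(filled):
            left, right = filled[start], filled[end]
            span = end - start
            for k in range(i, end):
                filled[k] = left + (right - left) * (k - start) / span
        i = end
    return filled


def quality_control(raw: Series, offset: float, scale: float,
                    low: float, high: float) -> Tuple[Series, List[str]]:
    """Run the three steps in order and record which actions were applied."""
    history: List[str] = []
    data = calibrate(raw, offset, scale)
    history.append(f"calibrate(offset={offset}, scale={scale})")
    data = flag_irregular(data, low, high)
    history.append(f"flag_irregular(low={low}, high={high})")
    data = fill_gaps(data)
    history.append("fill_gaps(method=linear)")
    return data, history


raw = [9.0, 10.0, None, 99.0, 13.0, 14.0]  # 99.0 is an implausible spike
clean, steps = quality_control(raw, offset=0.0, scale=1.0, low=0.0, high=50.0)
print(clean)  # [9.0, 10.0, 11.0, 12.0, 13.0, 14.0]
print(steps)
```

Here the spike is first flagged as missing and then filled together with the original gap. Running the same raw data with a different calibration or range would produce a different "clean" version, which is exactly why the list of applied steps matters.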

A Data Derivation Graph (DDG) records in detail how the processed data is derived from the raw data, using a branching, “tree”-like data structure. To explore this, we built a process that simulates the initial processing of the raw data and its reprocessing when quality control techniques improve. More specifically, we developed R programs to detect and fix quality control problems. We then implemented these R programs in both Kepler and Little-JIL, two software systems that can record data provenance, built identical processes in each, and compared the DDGs they generated. We found that although Kepler is easier to use for scientists with little programming background, Little-JIL stores more information in the DDG and produces a more legible and comprehensive graph.
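The idea behind a DDG can be illustrated with a toy Python sketch: each version of the data remembers which processing step produced it and which data it was derived from, so we can walk back from any processed version to the raw sensor data. This is only an assumption-laden illustration; the class and function names are hypothetical, and it does not reflect the actual DDG formats used by Kepler or Little-JIL.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DataNode:
    """One version of a dataset in a toy derivation graph."""
    name: str
    produced_by: Optional[str] = None  # process step that created it; None for raw data
    inputs: List["DataNode"] = field(default_factory=list)


def derive(name: str, step: str, *inputs: DataNode) -> DataNode:
    """Record that `step` turned `inputs` into a new data node called `name`."""
    return DataNode(name, step, list(inputs))


def lineage(node: DataNode, depth: int = 0) -> List[str]:
    """Walk back from `node` to the raw data, one indented line per data node."""
    origin = node.produced_by or "raw sensor data"
    lines = ["  " * depth + f"{node.name} <- {origin}"]
    for parent in node.inputs:
        lines.extend(lineage(parent, depth + 1))
    return lines


raw = DataNode("stream_gauge_raw")
calibrated = derive("calibrated", "calibrate", raw)
filled = derive("gap_filled", "fill_gaps", calibrated)
print("\n".join(lineage(filled)))
# gap_filled <- fill_gaps
#   calibrated <- calibrate
#     stream_gauge_raw <- raw sensor data
```

When a step takes several inputs, or when the raw data is reprocessed with an improved method, the structure branches, so in general it is a directed graph rather than a simple chain. This naive walk would list a shared ancestor once for every path that reaches it.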

Besides programming, we visited the six stream gauges in the Prospect Hill area once a week to collect data. We downloaded the data from the data logger at each stream gauge to a handheld palm device. In addition, we took manual measurements in the stream to compare with the sensor readings and detect potential sensor failures. Of course, there were plenty of mosquitoes near the streams. But we appreciated the experience because it helped us understand how sensors work in the field.

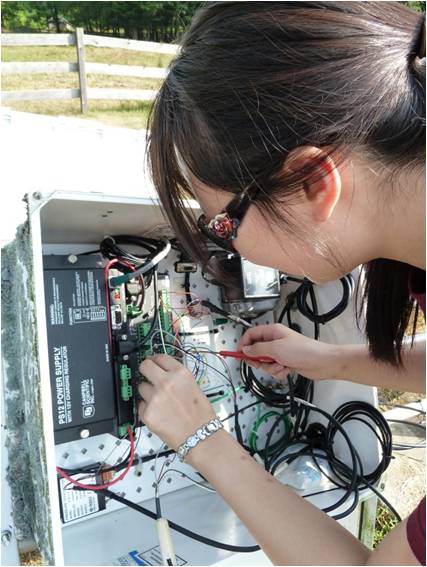

Besides the hydrological data, we also worked with data from the Met Station. The sensors at the Met Station need to be calibrated about every two years. This summer, Mark VanScoy took us to the Met Station to take the sensors off and replace them with calibrated ones. We tilted the tower down and opened the white box to unplug and re-plug the colorful wires connected to the sensors. Luckily, the cows did not bother us because they had been moved to the lower part of the pasture on purpose.

We spent most of our time sitting in the common room programming, and debugging could become very stressful. Bugs in computer programs are as annoying as, or maybe more annoying than, the bugs in the field; a computer bug is any error or flaw in a program. However, life became cheerful whenever Snickers, Prof. Lerner’s super cute Bernese mountain dog, was by our table. She brings so much joy and deserves all the love in the world.